While mostly anecdotal, the available evidence suggests that students significantly improved their ability to see patterns and connections buried deep in unstructured data sets, which was my first goal. This was particularly obvious in the graduate class, where I required students to jot down their conclusions before class.

An example of the growth I witnessed from week one to week ten may help here. The same student wrote both responses below, and I consider them representative of the class as a whole:

Week 2 Response: “This game (The Space Game: Missions) was predominantly about budgets and space allocation…. Strategy and forethought go into where you place lasers, missile launchers and solar stations, so that you don’t run out of minerals to power those machines and so repair stations are available for those that are rundown. It’s clear that resource and space allocation are key elements for a player to win this game, just as it is for the Intelligence Community and analysts to win a war.”

Week 8 Response: “I think if Chess dropped acid it’d become the Thinking Machine. When the computer player was contemplating its next move colorful lines and curves covered the board… To me, Chess was always a one-on-one game; a conflict, if you will, between black versus white… Samuel Huntington states up front that he believes that conflict will no longer be about Princes and Emperors expanding empires or influencing ideologies, but rather about conflicts among different cultures: ‘The great divisions among humankind and the dominating source of conflict will be cultural.’ Civilizations and cultures are not black and white, however; they’re not defined by nation-state borders. There are colors and nuances in culture requiring a change in mindset and in strategy to approach these new problems.”

Although this kind of change is difficult to measure quantitatively, criteria drawn from the critical thinking literature help gauge its extent.

Note: There is a widespread belief among intelligence professionals that teaching critical thinking methods will improve intelligence analysis (see David Moore’s comprehensive examination of this thesis in his book Critical Thinking and Intelligence Analysis). A minority of authors are less willing to jump on this particular bandwagon (see Behavioral and Psychosocial Considerations in Intelligence Analysis: A Preliminary Review of Literature on Critical Thinking Skills by the Air Force Research Laboratory, Human Effectiveness Division), citing a lack of empirical evidence for a correlation between critical thinking skills and improved analysis.

In particular, the Guide to Rating Critical Thinking developed by Washington State University rates student work on the following criteria:

- Identification of the question or problem

- Willingness to articulate a personal position or argument

- Willingness to consider other positions or perspectives

- Identification and assessment of key assumptions

- Identification and assessment of supporting data

- Consideration of the influence of context on the problem

- Identification and assessment of conclusions, implications and consequences

While such a list may not be perfect, nothing on it is inconsistent with good intelligence practice. Reading the representative example above with these criteria in mind makes the growth even more obvious: the increase in nuance, the willingness to challenge an acknowledged authority, and the nimble leaps from one concept to another. The growth in the second example is all the more impressive given that the Huntington reading was not required. Most students in the class showed this kind of growth over the course of the term, both in the quality of classroom discussions and in their written reports.

My second goal was for this improved ability to detect deep patterns in complex and disparate data sets to translate into higher-quality intelligence products for the decisionmakers sponsoring projects in the class.

Here the task was somewhat easier. I have solicited and received good feedback from each of the decisionmakers involved in the 78 strategic intelligence projects my students have worked on over the last 7 years. This feedback, leavened with a sense of the cognitive complexity of the requirement, yields a rough but useful assessment of how “good” each final project turned out to be.

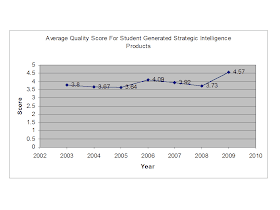

Mapping this overall assessment onto a 5-point scale permits a comparison of the average quality of the work across years. On this scale, a 3 indicates average “A” work; a 2 and a 1 indicate work below and well below “A” level, respectively; a 4 indicates “A+” or young-professional work; and a 5 indicates professional-quality work.

Note: “A” is average for the Mercyhurst Intelligence Studies seniors and second-year graduate students permitted to take Strategic Intelligence. To keep its graduates employable in this highly competitive field, the program requires students to maintain a cumulative 3.0 GPA simply to stay in the program and encourages them to maintain a 3.5 or better. In addition, the major is widely considered “challenging,” and students who, on reflection, do not see themselves in a career in intelligence analysis often change majors. As a result, the GPAs of the seniors and second-year graduate students who remain in the program are often quite high; the graduating class of 2010, for example, averaged a 3.66 GPA.

The chart above summarizes the results for each year. While the subjectivity inherent in some of the evaluations may have influenced individual scores, the number of projects suggests that averaging will smooth out, if not eliminate, some of this variation.
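As a rough illustration of the averaging argument, the Python sketch below groups (year, score) pairs on the 1-5 scale and reports each year’s mean alongside its standard error. The function name `summarize_by_year` and the sample scores are hypothetical placeholders, not the actual project ratings. Assuming the ratings are roughly independent, the standard error of the mean falls as 1/√n, which is why a larger pool of projects per year damps the effect of any single subjective rating.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev


def summarize_by_year(scored_projects):
    """Return {year: (n, mean, standard_error)} for (year, score) pairs.

    Scores are assumed to be on the 1-5 scale described in the text.
    """
    by_year = defaultdict(list)
    for year, score in scored_projects:
        if not 1 <= score <= 5:
            raise ValueError(f"score {score} is outside the 1-5 scale")
        by_year[year].append(score)

    summary = {}
    for year, scores in sorted(by_year.items()):
        n = len(scores)
        # Standard error of the mean shrinks roughly as 1/sqrt(n), assuming
        # independent ratings; it is undefined for a single project.
        se = stdev(scores) / sqrt(n) if n > 1 else float("nan")
        summary[year] = (n, mean(scores), se)
    return summary


# Hypothetical placeholder scores for illustration only (not the real data).
example = [
    ("Year 1", 3), ("Year 1", 4), ("Year 1", 3),
    ("Year 2", 4), ("Year 2", 5), ("Year 2", 4), ("Year 2", 3),
]
for year, (n, avg, se) in summarize_by_year(example).items():
    print(f"{year}: n={n}, mean={avg:.2f}, standard error={se:.2f}")
```

With real data, each year’s mean would correspond to one bar in a chart like the one above, and the standard error gives a rough sense of how much of a year-to-year difference could be noise.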

There are, to be sure, a number of possible explanations for the surge in quality evidenced by the most recent year group. The students could be naturally better analysts, the quality of instruction leading up to the strategic course could have improved dramatically, the projects could have been simpler, or the results could be a statistical artifact.

In my view, however, none of these explanations holds up. While additional statistical analysis has yet to be completed, the hypothesis that games-based learning improves the quality of intelligence products appears to have some validity and is, at the least, worthy of further exploration.

My third goal for a games-based approach was to better lock in the ideas most likely to be relevant to future strategic intelligence projects the students would attempt, most likely after graduation. To get a sense of whether the games-based approach succeeded in this regard, I sent each of the students in the three classes a letter requesting their general input on the class along with any suggestions for change or improvement. I sent these letters approximately five months after the undergraduate classes had finished and approximately 2.5 months after the end of the graduate class.

Seventeen of the 75 students (23%) who took one of the three courses responded by email, and a number of others stopped by to speak to me in person. In all, over 40% of those who took the class responded to my request for feedback in one way or another. This evidence, while still anecdotal, was consistent: games helped the students remember the concepts better.

Comments such as, “Looking back, I can remember a lot of the concepts simply because the games remind me of them” or “I am of the opinion that the only reason that the [lessons] stood out was because they were different from any other class most students have taken” were often mixed in with suggestions on how to improve the course. The verbal feedback was even more encouraging, with reports of discussions and even arguments centered on the games and their “meaning” weeks and months after the course was completed.

The evidentiary record, in summary, is clearly incomplete but encouraging. Games-based learning appears to have increased intelligence students’ capacity for sensemaking, to have improved the results of their intelligence analysis, and to have helped the lessons learned persist and even encourage new exploration of strategic topics months after the course ended.


Next:

What did the students think about it?
