Part 1 -- Introduction

Part 2 -- What Makes A Good Method?

Part 3 -- Bayesian Analysis (#5)

Part 4 -- Intelligence Preparation Of The Battlefield/Environment (#4)

Part 5 -- Social Network Analysis (#3)

Multi-Criteria Decision Making (MCDM) is a well-known and widely studied decision support method within the business and military communities. Some of the most popular variants of this method include the analytic hierarchy process, multi-attribute utility analysis and, in the US Army at least, the staff study (see Annex D). There is even an International Society on Multiple Criteria Decision Making.

At its most basic level, MCDM is used to evaluate a variety of courses of action against a set of established criteria (see the image below for what a simple matrix might look like). One can imagine, for example, considering three different types of car and evaluating them for criteria such as speed, cost and fuel efficiency. MCDM would suggest that the car that has the highest total rating across those three categories would be the best car to buy. In fact, Consumer Reports uses exactly this type of method in its famous "circle charts" of everything from cars to hair care products.
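
A minimal sketch of the simple additive matrix described above, assuming three hypothetical cars rated 1 to 5 (higher is better) on speed, cost and fuel efficiency. The car names and ratings are invented for illustration, and an unweighted sum is only the most basic scoring rule; the optional weights are an assumption added to show how sensitive the answer can be.

```python
# Hypothetical 1-5 ratings (higher is better) for three cars on three criteria.
# For "cost", a higher rating means the car is cheaper, i.e. more desirable.
CRITERIA = ["speed", "cost", "fuel_efficiency"]

RATINGS = {
    "Car A": {"speed": 4, "cost": 2, "fuel_efficiency": 3},
    "Car B": {"speed": 3, "cost": 4, "fuel_efficiency": 4},
    "Car C": {"speed": 5, "cost": 1, "fuel_efficiency": 2},
}


def total_scores(ratings, weights=None):
    """Sum each option's ratings across all criteria.

    With no weights every criterion counts equally; an optional dict of
    weights lets some criteria count more than others.
    """
    if weights is None:
        weights = {c: 1.0 for c in CRITERIA}
    return {
        option: sum(weights[c] * scores[c] for c in CRITERIA)
        for option, scores in ratings.items()
    }


def best_option(ratings, weights=None):
    """Return the option with the highest total score."""
    totals = total_scores(ratings, weights)
    return max(totals, key=totals.get)


if __name__ == "__main__":
    for option, total in total_scores(RATINGS).items():
        print(option, total)
    print("Best:", best_option(RATINGS))
```

With equal weights Car B wins (11 points against 9 and 8). Weighting speed three times as heavily ({"speed": 3, "cost": 1, "fuel_efficiency": 1}) flips the answer to Car C, a small example of how much the choice of criteria and weights matters.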

MCDM is flexible and works with a wide variety of data, but there are numerous devils in the details of its implementation. The simple example above gets increasingly complicated when we start to examine the many other criteria that someone might use to evaluate a car. Likewise, there are problems with rating each of these criteria (is a car that gets 27 miles to the gallon really worse than a car that gets 27.1 miles to the gallon?). Even worse is when the analyst starts to think about abstract evaluation criteria, such as which of the three cars is the "coolest." Start thinking like this and you begin to understand why there is an international society dedicated to this method...
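
One way to see the 27 versus 27.1 miles-per-gallon problem is to convert raw measurements into ratings with fixed cut-offs. The sketch below assumes a hypothetical 1-to-5 fuel-efficiency scale whose cut-offs are chosen purely for illustration; with those cut-offs, a tenth of a mile per gallon moves a car into a higher rating band, while a 3 mpg gap inside one band changes nothing.

```python
import bisect

# Hypothetical cut-offs (mpg) for a 1-5 fuel-efficiency rating.
# Below 20.0 -> 1, [20.0, 24.0) -> 2, [24.0, 27.1) -> 3, [27.1, 32.0) -> 4, 32.0+ -> 5.
CUTOFFS = [20.0, 24.0, 27.1, 32.0]


def mpg_rating(mpg):
    """Map a miles-per-gallon figure onto a 1-5 rating band."""
    # bisect_right counts how many cut-offs are <= mpg, so a value that sits
    # exactly on a cut-off lands in the higher band.
    return 1 + bisect.bisect_right(CUTOFFS, mpg)


if __name__ == "__main__":
    for mpg in (24.0, 27.0, 27.1):
        print(mpg, mpg_rating(mpg))
```

Here 24.0 and 27.0 mpg both rate a 3, while 27.1 mpg rates a 4. Any fixed scale creates edges like this; moving the cut-offs only moves the edges.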

At its heart, however, MCDM is an operational methodology, not an intelligence method. That said, we have had very good luck “translating” it into an intelligence analysis method (i.e., MCIM) in our strategic intelligence projects. These projects have run the gamut from large-scale national security studies to small-scale business studies. The matrices can be simple (I actually used such a matrix to evaluate the 5 methods discussed in this series) or enormously complex (the MCIM matrix on the likely impact of chronic and infectious disease on US national security interests clocks in at 15 feet long when printed).

The key difference between the operational variant and the intelligence variant is perspective. In the operational variant, the analyst is trying to figure out his or her organization's best course of action. In the intelligence variant, the analyst puts himself or herself in the shoes of the adversary and attempts to envision how the other side might see both the courses of action available and the criteria with which it will evaluate them. The intelligence variant has not, to the best of my knowledge, been validated, but we have a grad student working on it.
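
The shift in perspective can be sketched with the same scoring arithmetic: the options stay fixed, but the weights become the analyst's estimate of how the adversary values each criterion rather than how the analyst's own organization would. Everything below (option names, criteria, ratings and both weight sets) is hypothetical, and this is only an illustration of the idea, not the MCIM procedure used in the projects described above.

```python
# Hypothetical 1-5 ratings of three courses of action open to an adversary
# (higher is more favorable to the adversary) against three criteria.
OPTIONS = {
    "Negotiate": {"cost": 5, "speed": 2, "domestic_support": 2},
    "Blockade": {"cost": 3, "speed": 3, "domestic_support": 4},
    "Strike": {"cost": 1, "speed": 5, "domestic_support": 5},
}

# How we would weigh the criteria ourselves (the mirror-imaging trap)...
OUR_WEIGHTS = {"cost": 3, "speed": 1, "domestic_support": 1}
# ...versus our estimate of how the adversary weighs them.
ADVERSARY_WEIGHTS = {"cost": 1, "speed": 2, "domestic_support": 3}


def rank_options(options, weights):
    """Return option names sorted from highest to lowest weighted score."""
    def score(name):
        return sum(weights[c] * rating for c, rating in options[name].items())
    return sorted(options, key=score, reverse=True)


if __name__ == "__main__":
    print("Our view:      ", rank_options(OPTIONS, OUR_WEIGHTS))
    print("Adversary view:", rank_options(OPTIONS, ADVERSARY_WEIGHTS))
```

Scored with our own priorities, "Negotiate" comes out on top; scored with the estimated adversary weights, the ranking reverses and "Strike" comes first. That gap is the kind of thing the intelligence variant tries to surface.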

The research agenda for this method (as with many of the other methods discussed so far) is straightforward. First, it has to be validated as an intelligence-specific methodology. The anecdotal evidence and the evidence in the operational literature are encouraging, but further testing needs to be done. Second, analysts need to figure out which variants of MCIM work best in which types of intelligence situations. Finally, we need to get the method out of the schoolhouse and into the field.

Next Week: #1!

Friday, December 12, 2008

Top 5 Intelligence Analysis Methods: Multi-Criteria Decision Making Matrices/Multi-Criteria Intelligence Matrices (#2)

Posted by Kristan J. Wheaton at 10:59 AM

Labels: intelligence, intelligence analysis, intelligence methods, list, MCDM, MCIM

Thursday, December 11, 2008

Top 5 Intelligence Analysis Methods: Social Network Analysis (#3)

Part 1 -- Introduction

Part 2 -- What Makes A Good Method?

Part 3 -- Bayesian Analysis (#5)

Part 4 -- Intelligence Preparation Of The Battlefield/Environment (#4)

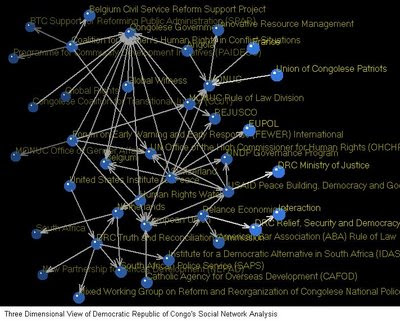

Social Network Analysis (SNA) is fundamentally about entities and the relationships between them. As a result, this method has a number of variations within the intelligence community, ranging from techniques such as association matrices through link analysis charts right up to validated mathematical models. It is most commonly used as a way to picture a network, however, and is rarely used in the more formal way envisioned by the sociologists who created the method. In other words, while SNA is a very powerful method, intelligence professionals rarely take advantage of its full potential.
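
As a small taste of the more formal side of SNA, the sketch below stores a hypothetical network as an adjacency list and computes normalized degree centrality: each entity's number of ties divided by n - 1, the most ties it could possibly have. The entities and ties are invented, and degree centrality is only one of the simplest standard measures.

```python
from collections import defaultdict

# Hypothetical undirected ties between entities (people, organizations, ...).
TIES = [
    ("A", "B"), ("A", "C"), ("A", "D"),
    ("B", "C"), ("D", "E"),
]


def build_graph(ties):
    """Build an undirected adjacency list: entity -> set of neighbors."""
    graph = defaultdict(set)
    for u, v in ties:
        graph[u].add(v)
        graph[v].add(u)
    return dict(graph)


def degree_centrality(graph):
    """Normalized degree centrality: number of ties / (n - 1) per entity."""
    n = len(graph)
    if n < 2:
        # A lone entity (or an empty graph) has no possible ties.
        return {node: 0.0 for node in graph}
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}


if __name__ == "__main__":
    centrality = degree_centrality(build_graph(TIES))
    for node, value in sorted(centrality.items(), key=lambda kv: -kv[1]):
        print(node, value)
```

In this toy network, entity A has ties to three of the other four entities and scores 0.75, the highest; E, tied only to D, scores 0.25.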

Because it is primarily a visual method, most analysts (and the decisionmakers they support) immediately grasp its value. Likewise, some variation of SNA will likely work with any data set where the entities and the relationships among those entities are important (in other words, almost every problem). Parsing all the relevant attributes can be difficult, however, and there are few automated solutions that work well with unstructured data sets. Moreover, at the higher levels of analysis, where the analyst is trying to do more than merely visualize a network, a good bit of specialized knowledge is required to understand the results.

Talking about SNA in the abstract doesn't make a lot of sense, though. It is much easier to grasp its value as an intel method by looking at some examples. i2's Analyst's Notebook is widely available, and examining the company's case studies is helpful in seeing what that tool can do. Another widely used tool, particularly for more formal analyses, is UCINET. Some of our students, for example, used this tool last year to examine the "social network" of government and non-governmental organizations engaged in security sector reform in sub-Saharan Africa (the image above comes from their study). Recently, my students and I have been playing around with a new tool from Carnegie Mellon University called ORA. It is very easy to use and very powerful.

Of the 5 methods I intend to discuss, SNA is the one with the widest visibility in all three major intelligence communities (national security, business and law enforcement). As such, there is already a good bit of research activity into how to better use this method in the intelligence analysis process. The big challenge, as I see it, is to design educational programs and tools that help analysts move beyond the "pretty picture" possibilities of this method and toward the more rigorous results that a formal application of it can generate.

Tomorrow: #2...

Posted by Kristan J. Wheaton at 11:37 AM

Labels: intelligence, intelligence analysis, intelligence methods, list, SNA, social networks

Wednesday, December 10, 2008

And Now For Something Completely Different... (YouTube)

This video draws on an interesting line of research at the Visual Cognition Lab at the University of Illinois, specifically on the lab's paper "Gorillas in Our Midst," published in Perception, which won a 2004 Ig Nobel Prize. The video used in this study, along with other videos from the lab, is available for purchase. We use the "basketball video" as an introduction to a series of classes on cognitive biases.

Posted by Kristan J. Wheaton at 9:23 AM

Labels: cognitive bias, Ig Nobel, video

Tuesday, December 9, 2008

Top 5 Intelligence Analysis Methods: Intelligence Preparation Of The Battlefield/Environment (#4)

Part 1 -- Introduction

Part 2 -- What Makes A Good Method?

Part 3 -- Bayesian Analysis (#5)

Intelligence Preparation of the Battlefield (IPB) is a time-tested and battle-tested method used by military intelligence professionals. Since its development by the US Army over 30 years ago, it has evolved into an increasingly useful and sophisticated analytic method. (Note: If you are interested in the Army's Field Manual on IPB, it is available through the FAS and many other places. We teach a very simplified version of IPB to our freshmen, and you can download examples of their work on Algeria and Ethiopia; they are .kmz files, so you will need Google Earth to view them.)

IPB is noteworthy for its flexibility. Its success in the field led to a variety of modifications and extensions of its basic concepts. The Air Force, for example, expanded IPB into what it calls Intelligence Preparation of the Battlespace, and I know that, several years ago, the National Drug Intelligence Center developed a similar method for use in counter-narcotics operations. Today, the broadest variation on the IPB theme seems to be what NGA calls Intelligence Preparation of the Environment (IPE or sometimes Intelligence Preparation of the Operational Environment -- IPOE).

IPB/IPE/IPOE reminds me a bit of Sherlock Holmes in The Sign of the Four: "Eliminate all other factors, and the one which remains must be the truth." The fundamental concept of IPB – using overlapping templates to divide a physical or conceptual space into go, slow-go and no-go areas – can clearly be applied to a variety of situations. The method is obviously most useful in situations where geography is important. Mountains, rivers, etc. restrict movement options, while roads and the like facilitate movement. Overlaying weather effects and opposing forces' doctrine on top of this geography (combined with several other factors) can give a commander a good idea of what is possible, impossible and likely.
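
The overlay idea can be sketched on a toy grid: each layer classifies every cell as go, slow-go or no-go, and the combined picture keeps the most restrictive classification in each cell. The grid, the two layers and the "most restrictive wins" rule are assumptions made for illustration; real IPB adds threat doctrine and many other factors, and this is not the procedure from the Army's field manual.

```python
from enum import IntEnum


class Mobility(IntEnum):
    """Mobility classes, ordered from least to most restrictive."""
    GO = 0
    SLOW_GO = 1
    NO_GO = 2


G, S, N = Mobility.GO, Mobility.SLOW_GO, Mobility.NO_GO

# Hypothetical 3x4 layers covering the same area.
TERRAIN = [
    [G, G, N, G],  # a river (no-go) runs down column 2...
    [G, S, N, G],
    [G, G, S, G],  # ...with a slow-go ford at the bottom
]
WEATHER = [
    [G, G, G, G],
    [S, S, G, G],  # rain-soaked low ground
    [G, G, G, S],
]


def overlay(*layers):
    """Combine layers cell by cell, keeping the most restrictive class."""
    rows, cols = len(layers[0]), len(layers[0][0])
    return [
        [max(layer[r][c] for layer in layers) for c in range(cols)]
        for r in range(rows)
    ]


def render(grid):
    """Draw a grid: '.' = go, '~' = slow-go, 'X' = no-go."""
    symbols = {Mobility.GO: ".", Mobility.SLOW_GO: "~", Mobility.NO_GO: "X"}
    return "\n".join("".join(symbols[cell] for cell in row) for row in grid)


if __name__ == "__main__":
    print(render(overlay(TERRAIN, WEATHER)))
```

Run as a script, it prints three rows, `..X.`, `~~X.` and `..~~`: the river stays a barrier, the weather slows the western low ground, and the only crossing is the slow-go ford.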

Simplify the concept even more (and remove it from its traditional military environment) and it begins to look like a Venn diagram, with intersecting circles, that is useful in any situation that can be thought of, concretely or abstractly, as a landscape. Imagine, for example, a business competitor in which we are interested. We suspect that it is preparing to launch a new product. How would we translate IPB into this environment? Perhaps we could see the various product lines where our competitor operates as "avenues of approach." The competitor's capabilities could be defined by its patent portfolio and financial situation. The competitor's "doctrine" could be extrapolated, perhaps, from its historical approach to new product launches. The validity of this and other similar approaches in other fields is, however, largely untested.
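
Read as a Venn diagram, the business example reduces to set intersection: the product lines where a launch looks most plausible are the ones that sit inside every circle at once. The product names and the membership of each set are entirely hypothetical; the three sets simply mirror the translation suggested above (avenues of approach, capabilities, doctrine).

```python
# Hypothetical "circles" for a competitor; each is a set of product lines.
AVENUES_OF_APPROACH = {"sensors", "routers", "cameras", "drones"}  # where it already operates
SUPPORTED_BY_CAPABILITIES = {"sensors", "cameras", "wearables"}   # patents and money to back a launch
CONSISTENT_WITH_DOCTRINE = {"sensors", "cameras", "routers"}      # fits its past launch pattern


def likely_launch_areas(*circles):
    """Return the product lines that fall inside every circle (the overlap)."""
    return set.intersection(*circles)


if __name__ == "__main__":
    print(sorted(likely_launch_areas(
        AVENUES_OF_APPROACH, SUPPORTED_BY_CAPABILITIES, CONSISTENT_WITH_DOCTRINE
    )))
```

Only sensors and cameras sit in all three circles here. Note that a product line missing from any one set, perhaps only because the data are incomplete, drops out of the result entirely.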

By defining, in advance, the relevant ways to group the data available for analysis, IPB is able to deal effectively with large quantities of both structured and unstructured data. While these groupings are typically quite general, they are finite, and it is possible for relevant data to fall through the cracks between them. Likewise, as the relevant groupings of data begin to proliferate, the method quickly moves from one that is simple in concept to one that is complex in application (the US Army's IPB manual runs to 270+ pages...).

For me, the research challenges here are straightforward. The military has a clear lock on developing this method within its environment; there is little value added for academia here. Beyond the military confines, however, the research possibilities are wide open. Does this method or some variation of it work in business? How best to define it in law enforcement situations? Could it work against gangs? In hostage situations? Crime mappers, in particular, might be able to utilize some of these concepts to further refine their art.

Tomorrow: Method #3...

Posted by Kristan J. Wheaton at 8:41 AM

Labels: intelligence, intelligence analysis, intelligence methods, IPB, list

Monday, December 8, 2008

Top 5 Intelligence Analysis Methods: Bayesian Analysis (#5)

Part 1 -- Introduction

Part 2 -- What Makes A Good Method?

(Note: Bayesian statistical analysis is virtually unknown to most intelligence analysts. This is unfortunate but true. At its core, Bayes is simply a way to rationally update previous beliefs when new information becomes available. That sounds like what intelligence analysts do all the time, but it has the word "statistics" attached to it, so even analysts who have heard of Bayes often decide to give it a miss. If you are interested in finding out more about Bayes, you can always check out Wikipedia, but I find even that article a bit dense. I prefer Bayes For Beginners -- which is what I am.)
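
For reference, the updating rule at the heart of Bayes fits on one line. In the standard notation used below (added here, not taken from the post), H is a hypothesis such as "war breaks out," E is a new piece of evidence, P(H) is the prior belief and P(H | E) is the updated, or posterior, belief. The denominator is simply the total probability of seeing E whether or not H is true.

```latex
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)}
```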

Bayes is the “Gold Standard” for analytic conclusions under conditions of uncertainty and probably ought to be closer to -- if not at -- the top of this list. It provides a rigorous, logical and thoroughly validated process for generating a very specific estimative judgment. It is also enormously flexible and can, theoretically, be applied to virtually any type of problem.

Theoretically. Ahhh... There, of course, is the rub. The problem with Bayes lies in its perceived complexity and, to a lesser degree, the difficulty in using Bayes with large sets of unstructured, dynamic data.

- Bayes, for many people, is difficult to learn. While the equation is relatively simple, its results are often counterintuitive. This is true, unfortunately, for both the analysts and the decisionmakers that intelligence analysts support. It doesn't really matter how good the intelligence analyst is at using Bayes if the decisionmaker will not trust the results at the end of the process because they come across as a lot of statistical hocus-pocus or, even worse, simply "seem" wrong.

- While Sedlmeier and Gigerenzer have had some luck teaching Bayes using so-called natural frequencies (and we, at Mercyhurst, have had some luck replicating their experiments using intelligence analysts instead of doctors), the seeming complexity of Bayes is one of the major hurdles to overcome in using this method effectively.

- In addition to the complexities of Bayes, it appears that this method, which works well with small, well-defined sets of any kind of data, does not handle large volumes of dynamic, unstructured data very well.

- Bayes seems to me to work best as an intelligence analysis method when an analyst is confronted with a situation where a new piece of information appears to significantly alter the perceived probabilities of an event occurring. For example, an analyst thinks that the odds of a war breaking out between two rival countries are quite low. Suddenly, a piece of information comes in that suggests that, contrary to the analyst’s belief, the countries are, in fact, at the brink of war. A Bayesian mindset helps to ratchet back those fears (which are actually best described by the recency and vividness cognitive biases).

- The real world doesn't present its data neatly, one piece at a time, however, and not all data should be weighted the same. When analysts try to go beyond having a "Bayesian mindset" and apply Bayes literally to real-world problems (as we have on several occasions), they run into problems. Think about the recent terrorist attacks in Mumbai. Arguably, the odds of war between India and Pakistan were quite low before the attacks. As each new piece of data rolled in, how did it change the odds? More importantly, how much weight should the analyst give that piece of data (particularly given that the analyst does not know how much data, ultimately, will come in before the event is "over")? Bayes is easier to apply if we treat "Mumbai Attack" as a single data point, but does that make sense given that new data on the attack continues to come in even now?

- Bayes, in essence, is digital, but life is analog. Figuring out how to "bin" or group the data rationally with respect to real-world intelligence problems is, in my estimation, one of the biggest hurdles to using Bayes routinely in intelligence analysis.
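
A worked version of the war example, using entirely hypothetical numbers: a 5 percent prior that war breaks out, and an alarming report that would show up 80 percent of the time if war were coming but also 10 percent of the time if it were not. The sketch computes the update with Bayes' rule, then again with natural frequencies (counting cases out of 1,000 imagined scenarios, in the spirit of the Sedlmeier and Gigerenzer approach), then applies a second report sequentially. That last step assumes, perhaps unrealistically, that the reports are independent of each other given the outcome, which is exactly the kind of assumption the Mumbai example calls into question.

```python
def bayes_update(prior, p_e_given_h, p_e_given_not_h):
    """Return P(H | E) from the prior P(H) and the two likelihoods."""
    numerator = p_e_given_h * prior
    evidence = numerator + p_e_given_not_h * (1.0 - prior)
    return numerator / evidence


def natural_frequency_update(prior, p_e_given_h, p_e_given_not_h, population=1000):
    """The same update, expressed as counts out of a population of scenarios."""
    with_h = prior * population                 # e.g. 50 of 1,000 scenarios end in war
    without_h = population - with_h             # 950 do not
    hits = p_e_given_h * with_h                 # war scenarios that produce the report: 40
    false_alarms = p_e_given_not_h * without_h  # peaceful ones that produce it anyway: 95
    return hits / (hits + false_alarms)         # 40 / 135


def sequential_update(prior, reports):
    """Apply several (p_e_given_h, p_e_given_not_h) reports one after another.

    Assumes each report is conditionally independent of the others given
    whether H is true; a strong assumption with real-world data.
    """
    belief = prior
    for p_e_given_h, p_e_given_not_h in reports:
        belief = bayes_update(belief, p_e_given_h, p_e_given_not_h)
    return belief


if __name__ == "__main__":
    print(round(bayes_update(0.05, 0.8, 0.1), 3))              # 0.296
    print(round(natural_frequency_update(0.05, 0.8, 0.1), 3))  # 0.296
    print(round(sequential_update(0.05, [(0.8, 0.1)] * 2), 3))  # 0.771
```

With these numbers, one alarming report lifts the estimate from 5 percent to about 30 percent, not to near-certainty, which is the "ratcheting back" described above. A second independent report of the same quality lifts it to about 77 percent. The hard part, deciding what counts as one report and what its likelihoods should be, is precisely what the sketch assumes away.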

Bayesian statistical analysis has enormous potential for the intelligence community. Twenty years from now, we will all likely have Bayesian widgets on our desktops that help us figure out the odds -- the real odds, not some subjective gobbledy-gook -- of specific outcomes in complex problems (in much the same way that Bayes powers some of the most effective and powerful spam filters today). The research agenda to get closer to this "Golden Age Of Bayesian Reasoning" is straightforward (but difficult):

- Figure out how to effectively and efficiently teach the basics of Bayes to a non-technical audience.

- Actually teach those basics to both analysts and decisionmakers so that both will have an appropriate "comfort level" with the fundamental concepts.

- Develop Bayesian-based tools (that are reasonably simple to use and in which analysts can have confidence) that deal with large amounts of unstructured information in a dynamic environment.

Anyone got any extra grant money lying around?

Tomorrow -- Method #4...

Posted by Kristan J. Wheaton at 8:20 AM

Labels: Bayes, intelligence, intelligence analysis, intelligence methods, list