We have done much work in the past on mitigating the effects of cognitive biases in intelligence analysis, as have others.

(For some of our work, see Biases With Friends, Strawman, Reduce Bias In Analysis By Using A Second Language or Your New Favorite Analytic Methodology: Structured Role Playing.)

(For the work of others, see (as if this weren't obvious) The Psychology of Intelligence Analysis or Expert Political Judgment or IARPA's SIRIUS program.)

This post, however, is indicative of where we think cognitive bias research should go (and in our case, is going) in the future.

Bottom line: Reducing bias in intelligence analysis is not enough and may not be important at all.

What analysts should focus on is forecasting accuracy. In fact, our current research suggests that a less biased forecast is not necessarily a more accurate forecast. More importantly, if indeed bias does not correlate with forecasting accuracy, why should we care about mitigating its effects?

In a recent experiment with 115 intel students, I investigated a mechanism that I think operates at the root of the cognitive bias polemic: Evidence weighting.

As I surveyed the cognitive bias literature, key phrases began to stand out, such as:

A positive-test strategy (Ed. Note: we are talking about confirmation bias here) is "the tendency to give greater weight to information that is supportive of existing beliefs" (Nickerson 1998). In this way, confirmation bias not only appears in the process of searching for evidence, but in the weighting and diagnosticity we assign to that evidence once located.

The research of Cheikes et al. (2004) and Tolcott et al. (1989) states that confirmation bias "was manifested principally as a weighting and not as a distortion bias." Further, the Cheikes article indicates that "ACH had no impact on the weight effect," having tested both elicitations of the bias (in both evidence selection and evidence weighting).

Emily Pronin (2007), the leading authority on Bias Blind Spot, presents a similar conclusion: "Participants not only weighted introspective information more in the case of self than others, but they concurrently weighted behavioral information more in the case of others than self."

Robert Jervis, professor of International Affairs at Columbia University, speaks about evidence-weighting issues in the context of the Fundamental Attribution Error in his 1989 work Strategic Intelligence and Effective Policy.

What if the impact of bias in analysis is less about deciding which pieces of evidence to use and more about deciding how much influence to allocate towards each specific piece? This would mean that to mitigate the effects of cognitive bias and to improve forecasting accuracy, training programs should focus on teaching analysts how to weight and assess critical pieces of evidence.

With that question in mind, I designed a simple experiment with four distinctly testable groups to assess the effects of evidence weighting on a) cognitive bias and b) forecasting accuracy.

Each of the four groups was required to spend approximately one hour conducting research on the then-upcoming Honduran presidential election to determine a) who was most likely to win and b) how likely they were to win (in the form of a numerical probability estimate, e.g. "X is 60 percent likely to win"). Each group, however, used a different degree of Analysis of Competing Hypotheses (ACH), allowing me to manipulate how much or how little the participants could weight the evidence. A description of each of the four groups is below:

- Control group (Cont, N=28). The control group was not permitted to use ACH at all. They had one hour to conduct research independently with no decision support tools.

- ACH no weighting (ACH-NW, N=30). This group used ACH. Participants used the PARC 2.0.5 ACH software without the ability to use II (highly inconsistent) or CC (highly consistent) functions. Nor were they allowed to use the credibility or the relevance functions.

- ACH with weighting (ACH-W, N=30). This group used ACH as they had been instructed, including II, CC and relevance, but not credibility.

- ACH with training (ACH-T, N=27). This was the focus group for the experiment. Participants in this group, which used ACH with full functionality (excluding credibility), first underwent a 20-minute instructional session on evidence weighting and source reliability employing the Dax Norman Source Evaluation Scale and other instructional material. In other words, these participants were instructed how to weight evidence properly.
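To make the manipulation concrete, here is a minimal sketch of how allowing or withholding the II and CC ratings can change which hypothesis looks least inconsistent. The penalty values, the `inconsistency_score` function, and the two example hypotheses are all hypothetical and chosen only for illustration. They are not the scoring constants or data used by the PARC ACH software, and credibility and relevance are left out entirely.

```python
# Hypothetical penalties per rating; NOT the actual PARC ACH constants.
PENALTY = {"CC": 0, "C": 0, "N": 0, "I": 1, "II": 2}

# Without weighting (as in the ACH-NW condition), the "highly" ratings
# are unavailable, so they collapse to their plain counterparts.
COLLAPSE = {"CC": "C", "II": "I"}


def inconsistency_score(ratings, allow_weighting=True):
    """Sum the inconsistency penalties for one hypothesis (higher = worse)."""
    total = 0
    for rating in ratings:
        if not allow_weighting:
            rating = COLLAPSE.get(rating, rating)
        total += PENALTY[rating]
    return total


# Made-up evidence ratings for two made-up hypotheses.
matrix = {
    "Hypothesis A": ["II", "II", "C"],
    "Hypothesis B": ["I", "I", "I"],
}

for name, ratings in matrix.items():
    weighted = inconsistency_score(ratings, allow_weighting=True)
    unweighted = inconsistency_score(ratings, allow_weighting=False)
    print(f"{name}: weighted = {weighted}, unweighted = {unweighted}")
```

In this toy matrix, Hypothesis A looks better than B when weighting is unavailable (2 versus 3) and worse when it is allowed (4 versus 3). That is the kind of swing an analyst who has not been trained in weighting could introduce without realizing it.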

The results were intriguing:

The group with the most accurate forecasts (79 percent) was the control group, or the group that did not use ACH at all (See Figure 1). The next most accurate group (65 percent) was the ACH-T group, or the ACH "with training."

Because of the small sample sizes, these differences did not turn out to be statistically significant, which in turn suggests the first major point: training in cognitive bias mitigation and some structured analysis techniques might not be as useful as originally thought.

If this were the first time these kinds of results had been found, it might be possible to chalk it up to some sort of sampling error. But Drew Brasfield found much the same thing when he ran a similar experiment back in 2009 (the relevant charts and texts are on pages 38-39). In Brasfield's case, participants were significantly less biased, in the statistical sense, when they used ACH, but forecasting accuracy did not differ significantly between groups (though, in this experiment, the ACH group technically outperformed the Control).

These results also suggest that more accurate estimates came from analysts who either a) intuitively weighted evidence without the help of a decision tool or b) were instructed how to use the decision tool with special focus on diagnosticity and evidence weighting. This could mean that analysts, when given the opportunity to weight evidence without knowing how much weighting and diagnosticity affect results, weight incorrectly, either out of a perceived obligation to do so or out of misunderstanding.

Finally, the next lowest forecasting accuracy was obtained by the ACH-NW group (53 percent), in which the analysts were not allowed to weight evidence at all (no IIs or CCs). The lowest accuracy (only 45 percent) was obtained by the ACH-W group, which was permitted to weight evidence with the ACH decision tool but was neither instructed how to do so nor informed how this weighting might influence the final inconsistency scores of its hypotheses. This final difference from the control was statistically significant, suggesting that a failure to train analysts how to weight evidence appropriately actually generates lower forecasting accuracy.
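For readers who want to see roughly how such comparisons can be checked, here is a minimal sketch of a pooled two-proportion z-test applied to the two comparisons described above. It rests on loud assumptions: the post does not say which test was actually used, the inputs are the rounded accuracy percentages and group sizes reported here rather than the raw counts, and the function name `two_proportion_z_test` is my own. Treat the output as a plausibility check, not a reproduction of the original analysis.

```python
import math


def two_proportion_z_test(p1, n1, p2, n2):
    """Two-sided pooled z-test for a difference between two proportions."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided tail probability under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# (accuracy, N) as reported in the post; percentages are rounded,
# so these are approximations of the underlying counts.
groups = {
    "Control": (0.79, 28),
    "ACH-T": (0.65, 27),
    "ACH-W": (0.45, 30),
}

control_acc, control_n = groups["Control"]
for name in ("ACH-T", "ACH-W"):
    acc, n = groups[name]
    z, p_value = two_proportion_z_test(control_acc, control_n, acc, n)
    print(f"Control vs {name}: z = {z:.2f}, p = {p_value:.3f}")
```

Under these assumptions, the Control versus ACH-T comparison stays above the conventional 0.05 threshold while Control versus ACH-W falls below it, which is in line with the description above. With groups this small, an exact test on the raw counts could shift borderline cases.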

If that weren't enough, let's take one more interesting look at the data...

In terms of analytic accuracy, the hierarchy is as follows (from most to least accurate): Control, ACH-T, ACH-NW, ACH-W.

Now, in terms of bias, the hierarchy looks something like this (from least to most biased):

- Framing: ACH-T, ACH-W, ACH-NW, Control

- Confirmation: ACH-W, ACH-T, Control, ACH-NW

- Representativeness: ACH-W, ACH-NW, Control, ACH-T

What this shows is an (albeit imperfect) inverse to analytic accuracy. In other words, the more accurate groups were also more biased, and while ACH generally helped mitigate bias, it did not improve forecasting accuracy (in fact, it may have done the opposite). If this experiment achieved its goal and effectively measured evidence weighting as an underlying mechanism of forecasting accuracy and cognitive bias, it supports the claim made by Cheikes et al. above: "ACH had no impact on the weight effect" (again, talking about confirmation bias) and, as mentioned, recreates the results found by Brasfield.
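One way to put a rough number on that "imperfect inverse" is a Spearman rank correlation between the accuracy ordering and each bias ordering listed above. The sketch below is purely descriptive: with only four groups no correlation can be treated as statistically meaningful, and the helper names (`ranks`, `spearman_rho`) are my own. Rank 1 means most accurate or least biased, so a negative value means the more accurate groups tended to be the more biased ones.

```python
def ranks(order):
    """Map each group to its 1-based position in an ordered list."""
    return {group: i + 1 for i, group in enumerate(order)}


def spearman_rho(rank_a, rank_b):
    """Spearman rank correlation for two rankings without ties."""
    n = len(rank_a)
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Orderings exactly as listed in the post.
accuracy_order = ["Control", "ACH-T", "ACH-NW", "ACH-W"]  # most to least accurate
bias_orders = {  # least to most biased
    "Framing": ["ACH-T", "ACH-W", "ACH-NW", "Control"],
    "Confirmation": ["ACH-W", "ACH-T", "Control", "ACH-NW"],
    "Representativeness": ["ACH-W", "ACH-NW", "Control", "ACH-T"],
}

accuracy_rank = ranks(accuracy_order)
for bias, order in bias_orders.items():
    bias_rank = ranks(order)
    rho = spearman_rho(
        [accuracy_rank[g] for g in accuracy_order],
        [bias_rank[g] for g in accuracy_order],
    )
    print(f"{bias}: rho = {rho:.2f}")
# Framing: rho = -0.40
# Confirmation: rho = -0.40
# Representativeness: rho = -0.80
```

All three coefficients come out negative but none reaches -1, which matches the "albeit imperfect" qualifier: the inverse relationship shows up in the rankings, but not cleanly.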

While the evidence weighting hypothesis is obviously in need of further investigation, this preliminary experiment provided initial results with some intriguing implications, the most impactful of which is that, while the use of ACH reduces the effects of cognitive bias, it may not improve forecasting accuracy. A less biased forecast is not necessarily a more accurate forecast.

***

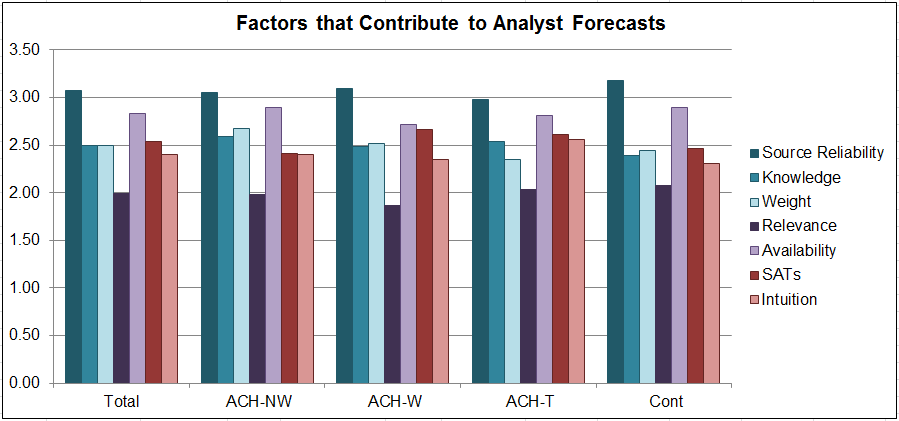

As a side note, I wanted to include this self-reported data which shows the components that the 115 analysts in this experiment indicated were most influential in their final analytic estimates in general. Note that they indicate that source reliability and availability of information seem to be the top two (See Figure 2).

Figure 2. Self-Reported Survey Data of 115 Analysts Indicating Factors That Most Influence Their Analytic Process (Scale = 1-4)

REFERENCES

Cheikes, B. A., Brown, M. J., Lehner, P. E., & Adelman, L. MITRE, Center for integrated intelligence systems. (2004). Confirmation bias in complex analyses (51MSR114-A4). Bedford, MA.

Jervis, R. (1989). Strategic intelligence and effective policy. In Frank Cass (Ed.), Intelligence and security perspectives for the 1990s. London, UK.

Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2(2), 175-220.

Pronin, E., & Kugler, M. B. (2007). Valuing thoughts, ignoring behavior: The introspection illusion as a source of the bias blind spot. Journal of Experimental Social Psychology, 43, 565-578.

Tolcott, M. A., Marvin, F. F., & Lehner, P. E. (1989). Expert decisionmaking in evolving situations. IEEE Transactions on Systems, Man, and Cybernetics, 19(3), 606-615.